Autonomous driving is the automotive industry's most heavily researched field, and it is bringing about a revolutionary shift by teaching cars to drive on their own in both controlled and uncontrolled environments. However, it is almost impossible to develop cars that surpass human driving without a powerful and reliable sensor system, especially when driving in adverse weather conditions such as rain and snow.

The camera has become a fundamental and the most important part of the sensor set used in autonomous cars. It is through a camera that a car can visually understand what is around it, much as the eyes do for humans. Hence, its reliability is key to safer and better driving.

Existing driver assistance systems work exceptionally well in clear weather. When driving through rain or snowfall, however, it is unavoidable that contaminants such as raindrops, snowflakes, dirt or soil adhere to the camera lens and severely hamper background visibility. Raindrops in particular blur the background, so that information is either lost or misinterpreted. This may in turn lead to the failure of driver assistance features such as pedestrian detection, object detection, traffic sign detection and many others. The camera must therefore deliver clear and complete information for better and safer driving.

Figure 1: Image captured by a camera with raindrops adhering to its lens. The background visibility is visibly hampered and blurred.

One way to avoid this problem is to ensure that the quality of the image received from the camera sensor is never compromised. This can be achieved with software that post-processes the images received from the camera and reconstructs them if they are deformed. However, to regain the lost information, the affected regions first have to be detected in the deformed images. At EDAG Electronics, AI-based software has been developed that can detect adherent raindrops in single images. Such a software solution is straightforward, permanent and reliable. Additionally, it is hardware independent, and the same software can be used with multiple cameras placed in a car (i.e., front-view, top-view, rear-view, surround-view cameras).

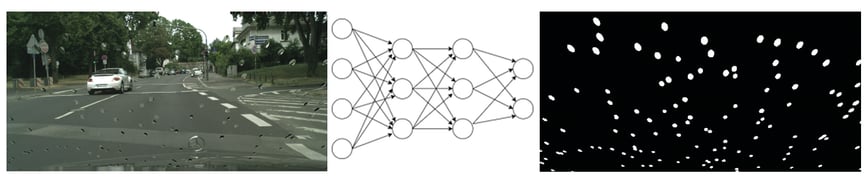

The raindrop detection system is called RDNet (Raindrop Detection Network). It takes in an image containing adherent raindrops, processes it, and generates a binary mask containing the precise locations of the adherent raindrops in the image. Raindrop regions are segmented as white and non-raindrop (background) regions as black.

Figure 2: A pictorial illustration of the raindrop detection system (RDNet). From left to right: input image with adherent raindrops, the model, and the binary mask generated by the model, which contains precise raindrop location information.
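The mask convention described above can be illustrated with a minimal sketch. It assumes, hypothetically, that the network produces a per-pixel raindrop probability between 0 and 1 and that a fixed threshold of 0.5 separates raindrop from background. Neither detail is stated for RDNet, and the function name `to_binary_mask` is made up for this illustration.

```python
from typing import List


def to_binary_mask(probabilities: List[List[float]],
                   threshold: float = 0.5) -> List[List[int]]:
    """Turn per-pixel raindrop probabilities into a black/white mask.

    Assumption: values are in [0, 1]; the 0.5 threshold is illustrative.
    White (255) marks raindrop pixels, black (0) marks background.
    """
    return [[255 if p >= threshold else 0 for p in row]
            for row in probabilities]


# Toy 3x3 "probability map" with a small raindrop in the lower right area.
probs = [
    [0.02, 0.10, 0.05],
    [0.08, 0.91, 0.76],
    [0.03, 0.64, 0.12],
]
mask = to_binary_mask(probs)
for row in mask:
    print(row)
```

A lower threshold would mark more pixels as raindrop at the cost of more false detections; the right value would have to be tuned on real data.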

RDNet – The Raindrop Detection Network

RDNet is a Convolutional Neural Network (CNN), a type of neural network specially designed to work with images. A CNN can be trained to identify and learn the features of a raindrop precisely, rather than relying on conventional feature description techniques. Because raindrops vary significantly in size, shape and texture, simple image segmentation techniques based on traditional feature description methods are not sufficient to produce accurate results for a use case as complex as autonomous driving.

A CNN departs from the traditional programming paradigm and instead learns from examples. Rather than manually defining the features of a raindrop one by one and building an algorithm around them, a few convolution layers are combined into a neural network and trained on a raindrop dataset. The network then learns the features of the raindrops, and of the continuously varying background, on its own.
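To make the idea of a convolution layer more concrete, the following sketch shows what a single convolution filter computes. The kernel here is written by hand and responds to vertical edges; in a CNN, the kernel values would be learned during training instead. The helper `conv2d` and the toy image are illustrative assumptions and do not reflect the actual RDNet architecture, which is not described here.

```python
from typing import List

Grid = List[List[float]]


def conv2d(image: Grid, kernel: Grid) -> Grid:
    """Slide the kernel over the image (no padding, stride 1) and return
    the weighted sum at each position, as CNN layers do."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy 4x4 image: dark on the left, bright on the right.
image = [[0, 0, 1, 1] for _ in range(4)]

# Hand-written kernel that reacts to a dark-to-bright transition.
# A trained network would learn such values from examples.
kernel = [[-1, 1],
          [-1, 1]]

response = conv2d(image, kernel)
for row in response:
    print(row)
```

The response is non-zero only where the window straddles the edge, which is the kind of local feature a stack of learned filters can combine into a raindrop detector.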

The CNN is trained with supervised learning, in which the network learns from pairs of inputs and desired outputs. The training data consists of both the input images with raindrops and the desired outputs, also known as binary labels. When trained on such a dataset, the network learns to associate each input image with its desired output (label). Once trained, the model is able to detect and predict raindrops in unseen examples based on what it has learned.
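Learning from image and label pairs requires a measure of how far a prediction is from its label. The sketch below uses per-pixel binary cross-entropy, a common choice for binary segmentation. This is an assumption for illustration: the loss actually used to train RDNet is not stated, and the function name and toy values are hypothetical.

```python
import math
from typing import List


def binary_cross_entropy(predicted: List[List[float]],
                         label: List[List[int]],
                         eps: float = 1e-7) -> float:
    """Mean per-pixel binary cross-entropy between predicted raindrop
    probabilities (0..1) and a binary label mask (0 or 1)."""
    total = 0.0
    count = 0
    for pred_row, label_row in zip(predicted, label):
        for p, y in zip(pred_row, label_row):
            # Clamp to avoid log(0) for fully confident predictions.
            p = min(max(p, eps), 1.0 - eps)
            total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
            count += 1
    return total / count


# Binary label: 1 = raindrop, 0 = background.
label = [[0, 1],
         [1, 0]]

good_prediction = [[0.1, 0.9],
                   [0.8, 0.2]]
bad_prediction = [[0.9, 0.1],
                  [0.2, 0.8]]

good_loss = binary_cross_entropy(good_prediction, label)
bad_loss = binary_cross_entropy(bad_prediction, label)
print(f"good: {good_loss:.3f}, bad: {bad_loss:.3f}")
```

Training adjusts the network's weights so that this kind of loss decreases across the dataset, which is how the network comes to associate inputs with their labels.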

The biggest challenge in any deep learning task is data availability. To train RDNet, a special raindrop dataset with synthetically generated raindrops was used. The dataset contains 35,700 single training images and their corresponding binary labels. To make the data more realistic and practical, the image backgrounds show urban areas under cloudy weather conditions.

Figure 3: Sample image with adherent raindrops used to train the network. The raindrops are synthetically generated on this background.

By integrating RDNet with reconstruction software such as DiFoRem (another in-house software product, developed to reconstruct portions of images occluded by contaminants), the deformed regions of the images can be reconstructed. DiFoRem uses the binary masks generated by RDNet to produce clean, clear images with improved background visibility. In the future, RDNet will be expanded to detect other contaminants as well, such as dirt, snow and soil. The detections made using RDNet are shown below:

Figure 4: Detections made by RDNet. From top to bottom: raindrop image, predicted mask, and raindrops detected by the network highlighted in blue.
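A visualization like the bottom image in Figure 4 can be produced by blending a highlight color into every pixel that the mask marks as raindrop. The sketch below is an assumption about how such an overlay might be drawn, not the tooling used for the figure. The function `highlight_raindrops`, the 50/50 blend and the RGB tuple layout are all illustrative.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0..255


def highlight_raindrops(image: List[List[Pixel]],
                        mask: List[List[int]],
                        color: Pixel = (0, 0, 255)) -> List[List[Pixel]]:
    """Blend `color` 50/50 into every pixel whose mask value is non-zero.

    Assumption: the mask uses 255 for raindrop and 0 for background.
    Background pixels are returned unchanged.
    """
    result = []
    for image_row, mask_row in zip(image, mask):
        row = []
        for pixel, m in zip(image_row, mask_row):
            if m:
                # Integer average of the pixel and the highlight color.
                pixel = tuple((c + k) // 2 for c, k in zip(pixel, color))
            row.append(pixel)
        result.append(row)
    return result


# Toy 1x2 image: the right pixel is marked as raindrop by the mask.
image = [[(100, 100, 100), (200, 200, 200)]]
mask = [[0, 255]]

overlay = highlight_raindrops(image, mask)
print(overlay)
```

Blending rather than overwriting keeps the underlying image content visible, which makes it easier to judge by eye whether a detection is plausible.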

AI has paved the way for turning autonomous cars, once only a concept, into reality. It enables the vehicle to perceive, hear and make decisions just like a human. At EDAG, we believe in being “Always a little different and always a little ahead of time”. Our solutions for defining and developing vehicle technologies that ensure better and safer driving are therefore always future oriented. We are happy to support our customers with forward-looking solutions in making autonomous driving a reality.

Our team in Lindau / Ulm is working intensively on tasks in the areas of Deep Learning and Computer Vision. If you are interested in these technologies or in other application areas of AI, Malavika Venugopal, AI software developer, will be happy to help you.

For more information, download the white paper "How a combination of AI and camera systems clears the way for the future of autonomous driving".