ADAS. When you first hear it, it sounds a bit like ADHD. But unlike that abbreviation, ADAS has nothing at all to do with an attention deficit. On the contrary, ADAS (Advanced Driver Assistance Systems) are characterised by a high level of attention, and react whenever the driver is inattentive.

Artificial intelligence (AI) has long since found its way into many areas of our lives. Using large data volumes, AI can, for instance, automatically supply the supermarket with fresh food so that the shelves are full again in the morning. It can also be used to optimise our photos, give us tips for endurance workouts, or plan the quickest route through congested cities. Dismissed as fiction or nonsense just a few years ago, this technology is now also playing an increasingly important role in the automotive industry.

For many people, it is still very difficult to imagine that, instead of having to drive themselves, they might hand over all responsibility to a number of different systems. Who actually guarantees the safety of these systems? How can they be validated? What steps can be taken to guarantee that, as precursors of autonomous driving, ADAS systems will work in every country – not just on manageable German country roads, but also in chaotic megacities? We, the EDAG Group's experts, make sure that people's trust in such systems is justified, and that they function even in difficult situations. No matter where or when. To this end, we develop and validate ADAS hardware/software systems in a wide variety of areas, and work with our customers to derive definite improvements.

A powerful combination – ADAS and artificial intelligence

Without artificial intelligence (AI), autonomous driving is simply not possible. Deep learning, among other things, plays a central role here. It is based on deep neural networks, which are modelled on the human brain and, for instance, make associations with their environment, from which they infer their tasks and actions.

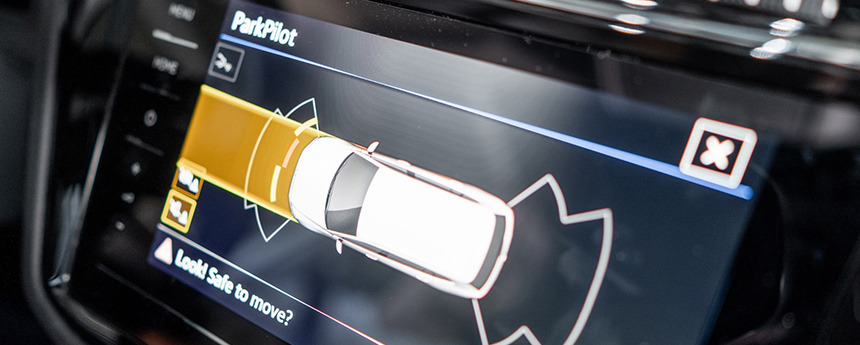

Driver assistance systems have been designed to simplify and even automate driving. Driving situations can be electronically supported and decisions simplified, or even made for the driver. You already know many of these systems: emergency brake assistant, tyre pressure control system, adaptive cruise control (ACC), parking assistant (automatic parking), blind spot monitor, rear view camera, road sign identification and lane departure warning system.

EDAG Electronics has been assisting automobile manufacturers with developments related to piloted driving for a long time. For example, an EDAG Group team of around 60 functional safety and software development experts helped a major OEM and TIER to take a next generation vehicle towards level 3. Level 3? What does this mean?

The automation of cars is divided into Level 0 to Level 5:

- Level 0

The person drives without the influence of sensors. Only information is given.

- Level 1: assisted driving

Assistance systems, such as an automatic lane keeping assistant or cruise control, assist the driver in certain tasks. The driver must have constant control over the vehicle and the traffic.

- Level 2: semi-automated driving

The vehicle can carry out driving tasks independently at times, but needs human assistance in unforeseen situations. For example, the car has a lane departure warning system, automatic cruise control and an overtaking function, or can park independently.

- Level 3: automated driving

Here, the driver can sometimes sit back and relax. The vehicle can drive independently on the motorway, where there is no oncoming traffic, no intersections or pedestrians. The driver must always be able to intervene in dangerous situations.

- Level 4: fully automated driving

On certain routes, the car takes over the driving completely independently. The driver no longer needs to pay attention to the traffic and the surroundings. The vehicle must also be able to detect traffic lights and other moving cars without errors under real-life conditions. Theoretically, the car could be on the road without anybody in it.

- Level 5: autonomous driving

With fully autonomous driving, there is no longer a driver. Everyone in the car is a passenger, and the level 5 vehicle drives fully autonomously. It can also carry out complex driving manoeuvres such as dealing with junctions or going round roundabouts independently. An autonomous car does not need a steering wheel, because – in the true sense of the word – there is no driver who could intervene and steer in a dangerous situation.
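For readers who think in code, the six levels can be summarised in a small lookup. This is only an illustrative sketch: the names `AutomationLevel` and `driver_role` are hypothetical, and the role descriptions are a coarse paraphrase of the list above, not a normative definition.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """Automation levels 0 to 5 as described in the list above."""
    NO_AUTOMATION = 0
    ASSISTED = 1
    SEMI_AUTOMATED = 2
    AUTOMATED = 3
    FULLY_AUTOMATED = 4
    AUTONOMOUS = 5


def driver_role(level: AutomationLevel) -> str:
    """Return a coarse, illustrative summary of what is expected of the human."""
    if level <= AutomationLevel.SEMI_AUTOMATED:
        return "drives or supervises continuously"
    if level == AutomationLevel.AUTOMATED:
        return "may relax, but must be able to intervene"
    if level == AutomationLevel.FULLY_AUTOMATED:
        return "no attention needed on certain routes"
    return "passenger only"


if __name__ == "__main__":
    for level in AutomationLevel:
        print(int(level), level.name, "->", driver_role(level))
```

The point of the step from level 2 to level 3 becomes visible here: it is the first level at which the human is no longer expected to supervise continuously.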

All a question of development and validation

To ensure that the systems deliver in practice what they promise in theory, more and more OEMs are relying on the experts at the EDAG Group. As the level of automation rises, the steps involved in the approval process become increasingly complex, especially when it comes to the aim of establishing a vehicle for global use on the market. With its international line-up, EDAG is a strong partner for such complex challenges.

One example of this is the blind spot monitor, which is radar operated in the USA. In Germany, the emphasis is more on ultrasound, as this technology is just as reliable at a fraction of the cost. However, differences between countries apply not only to rules and regulations, but also to the way people drive. A good example of this is the ACC system (adaptive cruise control): experience has shown that drivers in the USA tend to brake and accelerate smoothly and slowly, whereas Germans brake and accelerate far more sharply. In this context, EDAG checks how the software has to be adjusted and what tests are necessary, and then carries them out.

Greater efficiency due to an innovative EDAG tool

Drive – brake – drive – brake – it is hard to believe that, simple as it sounds, testing braking and acceleration cues is actually a demanding and cost-intensive practice. A brake robot is used to carry out this type of safety check. A complex procedure. And one for which the EDAG experts found a smart alternative: a software tool developed in-house simulates an ACC control unit (including plausibility checks such as the dynamic checksum and rolling counter) and ensures that pre-defined and reproducible braking requests are carried out. In this way, the vehicle's performance is validated. The tool is used worldwide.
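To give an idea of what a rolling counter and checksum do, here is a minimal sketch. It is not the EDAG tool: the three-byte frame layout, the 4-bit counter width, the XOR checksum and all names (`BrakeRequestSender`, `is_plausible`) are assumptions chosen for illustration, since real message layouts and checksum algorithms are manufacturer-specific.

```python
from dataclasses import dataclass


def xor_checksum(data: bytes) -> int:
    """XOR all bytes together (a stand-in for a real, OEM-specific checksum)."""
    result = 0
    for byte in data:
        result ^= byte
    return result


@dataclass
class BrakeRequestSender:
    """Builds simulated brake-request frames with a 4-bit rolling counter."""
    counter: int = 0

    def build_frame(self, decel_request: int) -> bytes:
        """Assumed frame layout: [decel_request, counter, checksum]."""
        if not 0 <= decel_request <= 255:
            raise ValueError("decel_request must fit into one byte")
        body = bytes([decel_request, self.counter])
        frame = body + bytes([xor_checksum(body)])
        self.counter = (self.counter + 1) % 16  # wrap around after 15
        return frame


def is_plausible(frame: bytes, expected_counter: int) -> bool:
    """Receiver-side check: correct length, checksum and counter value."""
    if len(frame) != 3:
        return False
    _decel, counter, checksum = frame
    return checksum == xor_checksum(frame[:2]) and counter == expected_counter


if __name__ == "__main__":
    sender = BrakeRequestSender()
    for expected in range(3):
        frame = sender.build_frame(40)
        print(frame.hex(), is_plausible(frame, expected))
```

A receiver that sees a repeated or skipped counter value, or a checksum that does not match, can reject the frame. That is why a simulated control unit has to reproduce both fields correctly before the vehicle will act on its braking requests.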

Performance drive to validate the traffic sign recognition assistant

Here is a further example of which methods can be applied to make this type of validation more efficient:

You are travelling along a country road and, lost in thought, overlook a speed limit. The traffic sign assistant can save your driving licence here, because it recognises road signs and relays the relevant information to the driver.

From a technical point of view, there are two ways of constructing this type of system. The currently valid signs are identified either with the help of the navigation device's position sensor or by means of a camera with image recognition software, which is positioned at the front of the car and can recognise and analyse signs.

The automotive industry makes use of both alternatives. The task of the driver assistance experts at EDAG Electronics is to work out whether it is possible to combine the two possibilities. The thing to do is find out the added value and determine when data from the navigation system is really useful, when redundant, and when it serves no purpose at all.

Here again, the solution lies in an in-house development. With the help of a high resolution camera, the software tool records all test drives carried out. At the same time, it also keeps a record of all the traffic signs recognised by the vehicle. At the end of these performance drives, the EDAG software automatically assesses whether the driver assistance system made the right decisions or needs to be improved upon. This is not only an ideal way of coordinating the two technological approaches (GPS and image recognition), but also of judging which traffic signs should be displayed in what order. After all, there is no need for every single one of the huge number of signs that a car passes to be displayed.
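The kind of assessment described above can be sketched as a comparison between a reference list of signs and the signs the vehicle reported. The following is a simplified illustration under stated assumptions, not the actual EDAG software: the data format (lists of `(timestamp_s, label)` tuples), the function name `assess_drive` and the two-second matching tolerance are all hypothetical.

```python
def assess_drive(reference, recognised, tolerance_s=2.0):
    """Match recognised signs to reference signs by label and time.

    Both inputs are lists of (timestamp_s, label) tuples.
    Returns (hits, missed, false_alarms).
    """
    unmatched = sorted(recognised)
    hits, missed = [], []
    for ref_time, ref_label in sorted(reference):
        # First recognised sign with the same label inside the time window.
        match = next(
            (r for r in unmatched
             if r[1] == ref_label and abs(r[0] - ref_time) <= tolerance_s),
            None,
        )
        if match is None:
            missed.append((ref_time, ref_label))
        else:
            hits.append((ref_time, ref_label))
            unmatched.remove(match)
    # Whatever was recognised but never matched counts as a false alarm.
    return hits, missed, unmatched


if __name__ == "__main__":
    reference = [(10.0, "limit_70"), (55.0, "no_overtaking"), (90.0, "limit_50")]
    recognised = [(10.8, "limit_70"), (56.0, "limit_50"), (90.5, "limit_50")]
    hits, missed, false_alarms = assess_drive(reference, recognised)
    print("hits:", hits)
    print("missed:", missed)
    print("false alarms:", false_alarms)
```

Running the same comparison separately for camera-based and navigation-based recognition would show where the two sources agree, where one adds value, and where it is redundant.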

When you have reached your destination, the automated checks carried out by the parking system ensure that you can get out easily once your vehicle has parked itself. The idea here is for laser distance sensors and test automation to record the parking position of a piloted vehicle, in this way checking the performance of the parking system. All a question of validation.
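How such a check might look in principle: the measured distances around the parked vehicle are compared against tolerances. This is a sketch only. The class `ParkingMeasurement`, the function `evaluate_parking` and every threshold value are assumptions for illustration, not figures from an actual test specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParkingMeasurement:
    """Distances in metres from the parked vehicle to its surroundings."""
    left: float
    right: float
    front: float
    rear: float


def evaluate_parking(m, min_side=0.5, min_end=0.3, max_offset=0.2):
    """Return a list of findings; an empty list means the manoeuvre passed."""
    findings = []
    if min(m.left, m.right) < min_side:
        findings.append("side clearance too small to open the doors")
    if min(m.front, m.rear) < min_end:
        findings.append("too close to the vehicle in front or behind")
    if abs(m.left - m.right) > max_offset:
        findings.append("vehicle not centred in the bay")
    return findings


if __name__ == "__main__":
    print(evaluate_parking(ParkingMeasurement(0.7, 0.65, 0.5, 0.4)))
    print(evaluate_parking(ParkingMeasurement(0.3, 0.9, 0.5, 0.4)))
```

Because the evaluation is automated, the same criteria can be applied to every parking manoeuvre in a test series, which makes the results comparable.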

Our lives in artificial hands – ADAS can now get us safely through the fog

People can already rely on driver assistance systems and on the fact that they will warn them of danger. But does that apply to all weather conditions? The thought of mass pile-ups caused by thick fog, with countless cars crashing into each other, and people possibly dying, is a nightmare.

Camera systems in vehicles recognise all sorts of things, including traffic signs, road markings and other road users. Weather effects, for instance fog, can make the pictures very cloudy. Conventional algorithms come up against their limits here, and present a considerable safety risk. In order to change this in the future, we have developed a new model in which images from a camera are reconstructed or “defogged” using artificial intelligence.

So that it will be possible in the future to assess a critical situation even in difficult weather conditions, we are training a special form of neural network, a “convolutional neural network”. This model is being trained by means of the “supervised learning” method, using a GPU server to accelerate calculation and analysis. Once trained, it is able to extract characteristics of an image and then restore lost colours and contrasts in the picture.

To be able to learn, artificial intelligence needs large data volumes. To this end, we generated around 200,000 synthetic snapshots with the IPG CarMaker simulation tool: a total of 22,222 single images, taken from three different angles, each rendered with nine different fog densities. The snapshots show car journeys in the city and overland, with an authentic infrastructure. Using a simulator means that different fog densities can be generated precisely, consistently and far more quickly than on the road. It also provides the fog-free reference image that is needed for supervised learning.

Left: foggy snapshot, centre: “transmission map”: grey-value image representing the light attenuation with full pixel accuracy, right: defogged picture
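The role of the transmission map can be illustrated with the atmospheric scattering model that is commonly used in the dehazing literature, I = J·t + A·(1 − t), where I is the foggy pixel, J the clear pixel, t the transmission and A the brightness of the fog itself. Whether our model uses exactly this formulation is not stated here, so the following is a sketch under that assumption. It works on grey values between 0 and 1; the function names and the default values for `airlight` and `t_min` are hypothetical.

```python
def add_fog(clear, transmission, airlight=1.0):
    """Apply the scattering model I = J*t + A*(1 - t) to every pixel."""
    return [
        [j * t + airlight * (1.0 - t) for j, t in zip(row_j, row_t)]
        for row_j, row_t in zip(clear, transmission)
    ]


def defog(foggy, transmission, airlight=1.0, t_min=0.1):
    """Invert the model: J = (I - A) / max(t, t_min) + A, clipped to [0, 1]."""
    result = []
    for row_i, row_t in zip(foggy, transmission):
        row = []
        for i, t in zip(row_i, row_t):
            # t_min avoids dividing by (almost) zero in very dense fog.
            j = (i - airlight) / max(t, t_min) + airlight
            row.append(min(1.0, max(0.0, j)))
        result.append(row)
    return result


if __name__ == "__main__":
    clear = [[0.2, 0.8], [0.5, 0.0]]
    transmission = [[0.9, 0.5], [0.3, 0.7]]
    foggy = add_fog(clear, transmission)
    print("foggy:   ", foggy)
    print("restored:", defog(foggy, transmission))
```

In this picture, the hard part is not the inversion but estimating the transmission map from a single foggy image, and this is the step for which a trained neural network is used. The same `add_fog` relationship also shows why synthetic data is attractive: given a clear image and a chosen fog density, the foggy counterpart and its reference are both known exactly.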

This development is an important milestone towards autonomous driving. Only if the traffic situation is correctly assessed by the driver assistance systems can the safety of all road users be guaranteed, and lives possibly also saved. And this will only work with the use of artificial intelligence.

We take on the challenge of improving mobility

The EDAG Group works tirelessly on innovations for the mobility of tomorrow. With the EDAG CityBot, we will reach the next, new and sustainable level in urban space: to make the city of the future clean, safe, worth living in, friendly, quieter – and simply smarter.

Our swarm-intelligent, multifunctional robot vehicle is equipped with a fuel cell drive, and can be operated around the clock if required. Depending on requirements, it can be converted by fitting add-on modules. It can serve as a passenger cell for instance, as a cargo carrier, or a city cleaning vehicle. Just the thing for the city of the future. And fully autonomous.

It goes without saying that we combine our engineering competencies in the fields of Vehicle Engineering, Electrics/Electronics and Production Solutions not just for our own projects, such as the CityBot, but especially for your tasks in the field of autonomous driving and artificial intelligence. Jacek Burger, Head of Embedded Systems & Computer Vision/AI, will be happy to explain in detail what added value we get thanks to innovative software and sensor technology.